Abstract


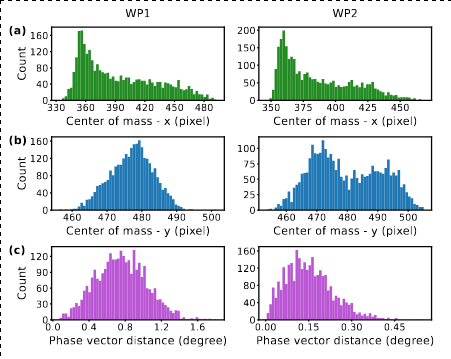

Modeling large-scale research facilities is extremely challenging because of complex physical processes and engineering problems. Here, we adopt a data-driven approach to model the longitudinal phase-space diagnostic beamline at the photoinjector of the European XFEL with an encoder-decoder neural-network model. A deep convolutional neural network (the decoder) builds the images measured on the screen from a small feature map generated by another neural network (the encoder). We demonstrate that the model, trained only on experimental data, can make high-fidelity predictions of megapixel images for the longitudinal phase-space measurement without any prior knowledge of photoinjectors or electron beams. The predictions significantly outperform those of existing methods. We also show the scalability and interpretability of the model by sharing the same decoder among more than one encoder, each used for a different setup of the photoinjector, and propose a pragmatic way to model a facility with various diagnostics and working points. This opens the door to accurately modeling a photoinjector using neural networks and experimental data. The approach could be extended to the whole accelerator and even to other types of scientific facilities.

J. Zhu et al., High-Fidelity Prediction of Megapixel Longitudinal Phase-Space Images of Electron Beams Using Encoder-Decoder Neural Networks, Phys. Rev. Applied
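The following is a minimal pure-Python sketch of the general encoder-decoder idea. It is not the authors' network. The layer types, the toy sizes, and the names `Encoder`, `Decoder`, `upsample2x`, and `conv3x3` are all hypothetical assumptions:

* The encoder is a single dense layer with tanh.
* Each decoder block applies nearest-neighbour 2x upsampling, then a 3x3 convolution and ReLU.

The weights are random rather than trained on experimental data. The sketch shows how a vector of machine settings is mapped to a small feature map and then upsampled into an image. It also shows how two encoders for different (hypothetical) photoinjector setups can share one decoder.

```python
import math
import random


def upsample2x(fmap):
    """Nearest-neighbour 2x upsampling of one channel (a list of rows)."""
    out = []
    for row in fmap:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def conv3x3(channels, kernels, bias):
    """Zero-padded 'same' 3x3 convolution followed by ReLU.

    kernels[o][i] is the 3x3 kernel from input channel i to output channel o.
    """
    h, w = len(channels[0]), len(channels[0][0])
    out = []
    for o, k_o in enumerate(kernels):
        fmap = [[bias[o]] * w for _ in range(h)]
        for i, ch in enumerate(channels):
            k = k_o[i]
            for y in range(h):
                for x in range(w):
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            yy, xx = y + dy, x + dx
                            if 0 <= yy < h and 0 <= xx < w:
                                fmap[y][x] += k[dy + 1][dx + 1] * ch[yy][xx]
        out.append([[max(0.0, v) for v in row] for row in fmap])
    return out


class Encoder:
    """Hypothetical encoder: settings vector -> (channels x hw x hw) feature map."""

    def __init__(self, n_inputs, channels, hw, rng):
        self.channels, self.hw = channels, hw
        n_feat = channels * hw * hw
        self.w = [[rng.gauss(0, 0.5) for _ in range(n_inputs)] for _ in range(n_feat)]

    def __call__(self, settings):
        flat = [math.tanh(sum(w * s for w, s in zip(row, settings))) for row in self.w]
        n = self.hw
        # Reshape the flat vector to [channel][row][column].
        return [[flat[(c * n + y) * n:(c * n + y + 1) * n] for y in range(n)]
                for c in range(self.channels)]


class Decoder:
    """Hypothetical decoder: n_up blocks of (2x upsample, 3x3 conv, ReLU).

    The last block emits a single channel, i.e. the predicted screen image.
    """

    def __init__(self, channels, n_up, rng):
        self.blocks = []
        c_in = channels
        for b in range(n_up):
            c_out = 1 if b == n_up - 1 else c_in
            kernels = [[[[rng.gauss(0, 0.3) for _ in range(3)] for _ in range(3)]
                        for _ in range(c_in)] for _ in range(c_out)]
            self.blocks.append((kernels, [0.0] * c_out))
            c_in = c_out

    def __call__(self, feats):
        for kernels, bias in self.blocks:
            feats = conv3x3([upsample2x(ch) for ch in feats], kernels, bias)
        return feats[0]


rng = random.Random(0)
decoder = Decoder(channels=4, n_up=3, rng=rng)          # one decoder, shared
enc_a = Encoder(n_inputs=3, channels=4, hw=4, rng=rng)  # hypothetical setup A
enc_b = Encoder(n_inputs=5, channels=4, hw=4, rng=rng)  # hypothetical setup B
img_a = decoder(enc_a([0.1, -0.2, 0.5]))
img_b = decoder(enc_b([0.3, 0.0, -0.4, 0.2, 0.1]))
print(len(img_a), len(img_a[0]))  # 32 32
```

Each decoder block doubles the image side. Starting from a 4 x 4 feature map, eight blocks would give 1024 x 1024 pixels, roughly one megapixel. The sketch stops at three blocks (32 x 32) only to keep pure-Python run time short. Because the decoder is shared, supporting a new setup in this sketch only requires training a new encoder.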